Website Watchman manual

What follows is a full run-down of WebScraper's controls and features. The product home page and download are here.

Functionality

Watchman is 'Time Machine for your website'. It can crawl a website on schedule or manually, logging and archiving changes to pages or resources. It builds an archive, saving each page or resource that has changed, indexed so that it can display a page or export those files, exactly as they appeared on any given date.

In addition, it can monitor a single page, or focus on a specific part of that page, and alert you when there is a change.

If you are interested in a 'one shot' archive of a website, which is a common reason for people trying Watchman, then it can do this and you're welcome to use it that way. You can export an entire site after a single scan; you have the option of 'processing' the files so that they can be browsed offline, or 'preserving' them so that the files remain exactly as they were fetched. (See Archive browser tab > Export entire site below if you want to do this.)

But because that's not its primary purpose, it might not be obvious how to do that. For this reason, WebArch exists. It has the same engine as Watchman but a severely simple interface aimed at this one-shot crawl-and-archive.

Sites and Settings tab

These settings control the actual scan or crawl. If you want to scan an entire site (ie every page within the same domain) then you may be able to ignore this tab. There may be settings that you need to tweak in order to scan your site properly, or you may want to use the black / whitelisting to limit the scan to a particular section of the site.

Note that the engine has a 'down but not up' rule. ie if you start within a 'directory' such as peacockmedia.software/mac/webscraper then the scan will automatically only include pages within /webscraper.

Blacklist and whitelist rules allow you to set up rules to control the scope of the scan. You can set up a rule so that the scan will ignore links matching a certain string, or 'only follow' links matching a certain string. (There's no need to set up a rule if you want to limit your scan to a certain directory - see the note above about the 'down but not up' rule). The strings you enter are a 'partial match'. Not regex, although you can use certain characters such as * to mean 'any number of any character' and $ to mean 'at the end'.

Timeout and delays : Use these settings to 'throttle' or rate limit your crawl.

Threads controls how many simultaneous requests can be open. The default is 12 which will cause the scan to run pretty quickly if the server can cope. The maximum is 50 but in practice this control makes little difference past a certain point. Some servers may stop responding after a while if the rate is too high. Moving this slider to the far left will limit the scan to a single thread, ie each response is received and processed before the next is sent.

This is a crude control over the rate. It may be much better to use rate limiting (read on....)

Limit Requests to X per minute: The engine will be smarter if you use this control. It'll still use a small number of threads but will introduce calculated delays so that the overall number of requests doesn't exceed the number you set per minute.

Advanced scan options > Treat subdomains of root domain as internal allows you to decide whether to include subdomains, eg blog.peacockmedia.software in your scan

Advanced scan options > Ignore trailing slashes foo.com is considered the same as foo.com/ with this setting switched on. This avoids some duplication of pages in Watchman. Therefore this setting defaults to on. However, in a small number of sites, the trailing slash is needed and removing it leads to errors.

Advanced scan options > Ignore querystrings may be necessary in cases where spurious information in querystrings or specifically a session id are causing the scan to run on much longer than it should, or for ever.

Advanced scan options > Starting url contains page name without file extension If your urls include a page name with no file extension, eg mysite.com/mypage/, Watchman cannot know whether this is a directory or a page. If a directory, Watchman would limit its scan to the directory /mypage/. If it's a page then the scan would be limited to mysite.com. This situation is auto-detected, you should be asked the question if necessary, but you are able to manually change the setting here if it's wrong.

Advanced scan options > Render page (run js) If a page requires javascript to populate some or all content, it may display its 'noscript' text in browsers with javascript disabled and that may be what Scrutiny sees. If your site requires javascript to be switched on then Watchman can run javascript before scanning the page.

This can also be useful if your page contains dynamic content, ie the page loads in the browser and then loads content. In these cases, the page may have appeared to have been archived properly, but when viewing at a later date, new content is being displayed. The 'Render page' feature may 'fix' that content at the time when the page was archived.

The scan will be much slower and use more resources, so only use this option if you're absolutely sure that it's necessary.

Note that script will be executed that usually runs when the page loads, but Watchman can't perform user actions like clicking menus, or trawl through javascript searching for links.

Advanced scan options > Attempt authentication Watchman can check some sites which require authentication. Be aware that switching on this setting can damage your site including deleting your pages.

Yes, really. Some content management systems have buttons for managing pages, including deleting pages, which look like links to Watchman. I'm having to say this because it has happened in the past.

If you are going to 'attempt authentication', here are some precautions and good ideas:

- try to exclude such controls from being checked by using 'Don't check links containing'

- make sure you don't scan the 'admin' interface of your site

- log in using a user account with only 'reader' rights

- make sure your site is backed up and you are prepared to restore if the worst happens

- It's also important to blacklist (ie 'ignore') your logout link(s), eg set up a rule that says 'ignore urls containing logout' (or whatever).

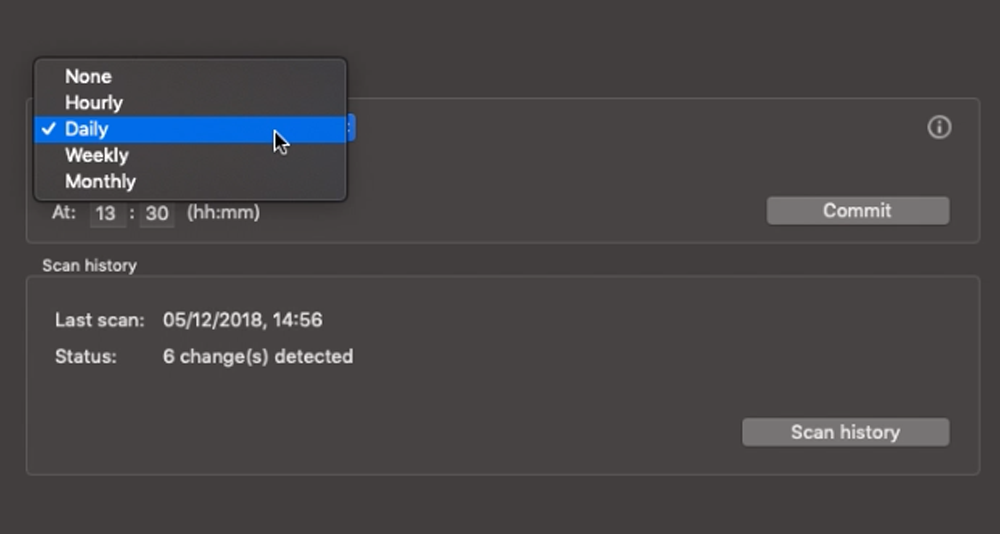

Schedule The app does not need to be running, it'll start up at the scheduled date/time, run the scan and then take any of the finish actions you've selected.

Since version 2.9.0, Watchman is capable of queueing your scheduled scans if they overlap. But try to space your schedules out if you can. It'll still only run one at a time.

Remember to press 'Commit' after making schedule changes, and this includes switching back to 'None'.

Filtering This comes into play after each page has been discovered and fetched. The filters in this dialog come into play before Watchman checks whether the page has changed since the last scan. So this is useful if you want to ignore any changes to the navigator / header / footer, for example. Or if you want an alert when a particular part of a page changes.

Alert options / Alert when.... This dialogue allows you to decide what changes Watchman alerts you to. For example you can specify source code or visible text. And you can define 'visible text' to include header, footer and nav tags, alt text. You can also choose to be alerted to changes to images, resources such as css and js, pdf documents or even the page's response code.

Note that unlike the Filter options, these options only affect when an alert is shown. An entry will still be made in the archive and change log for these things. To completely ignore certain changes, you must use the Filter dialogue.

Alert type You can choose whether you see a pop up alert (which also bounces the dock icon) or a notification centre banner. Or no alert at all (changes are still saved to the archive and logged). This button currently opens Preferences, which is where the Alert type buttons are.

Scan history gives you a simple overview of all scans made for this website. See the Changes tab for more details.

Changes tab

This is where you can see a list of changes since the last scan. Any pages or resources that have changed (depending on the Filter settings) will be listed here. The left and right arrow buttons allow you to switch the view to changes discovered during the previous scan, and then the scan before that.

The 'Reason' column will give a reason such as 'change to visible text' or 'change to source code'. These will obviously depend on which options are checked in 'Alert options' on the Sites and settings tab.

Where the reason is 'change to visible text' or 'change to source code' Watchman can display the text/code and highlight the changes, in a 2-pane 'before and after' window. Either right-click (or ctrl-click) the item in the table, or select it and use the 'Compare' button (top-right). Note that there is currently a limitation with this feature. If there is more than one change in the text/code and they appear a long way apart, a large block of text will be highlighted, ranging from the start of the first change to the end of the last.

Where the reason is an image or document that has changed, you can again view 'before' and 'after' side-by-side in the same way - right-click/ctrl-click or the 'Compare' button.

Archive browser tab

This is where you browse the archive for the site selected in the Sites and settings tab. It has a split view. The left side is a list of your pages. The right is like a web browser which will display the selected page as it appeared on the selected date.

Note that the list of pages is expandable. Below each page, you'll see a list of dates. These dates won't show every date that a scan was performed, only the dates that that page changed. You must select a date before you see anything in the browser.

If you have a lot of pages in the list, you can use the search box to find a particular url.Export page as... This button allows you to export the page that you're viewing. You can choose to export an image or a folder containing the files that make up that page. In the latter case the exported files will be exactly as they were fetched and have their original filenames.

Export entire site is a powerful feature. It allows you to export the entire site *as it appeared on a certain date*. Obviously if you've only made a single scan, then you'll only have one date to choose from. But Watchman is designed to repeatedly scan a site and build an archive.

Export entire site will export a 'slice' of the archive, relating to a specific date. First you have to choose a date. Instead of choosing a date, there's a shortcut button that will select the most recent scan. You also need to choose whether you want to 'process' the files so that they can be browsed in a web browser offline, or 'preserve' the files and export them exactly as they were fetched.

javascript enabled This button selects whether javascript is enabled in the browser pane. Some pages require this to be on in order to display properly.

Note that if your content only displays properly when javascript is enabled in the browser, you may find that your page is being generated dynamically. This means that whenever you view the archived page, you'll be seeing current content rather than historical content. To 'fix' the archived page, you may need to scan with Sites and settings > Render page (run js) switched on (so the page is rendered at scan time) and then leave 'javascript enabled' switched off in the browser.

Browser / source simply switches the archive browser between a web browser view and the source code view. In the latter case, you'll be seeing the source as it was fetched (it will probably be modified in the browser so that the page can be viewed offline, but you won't see that modified code.)

Preferences

Here you will find some global settings.

Alert type is described below Sites and settings.

Non-UI mode or 'headless' mode. This mode doesn't open a window when a scheduled scan starts, only a status bar icon/menu. This can be useful if you have scans scheduled to start at times when you're likely to be working and don't want the interruption of the app starting up.

User-agent string You can change the user-agent string to make Watchman appear to be a browser to the server (known as 'spoofing'). Choose one of the regular browsers from the drop-down menu or paste in one of your own.

Using the UA string of a mobile browser can be useful if the server serves a different version of the site to mobile browsers and you want to archive that version.

Exporting / directory urls default filename If the page has a url like https://peacockmedia.software/mac/scrutiny/ (where 'scrutiny' is a directory) then the page will be created in a directory called 'scrutiny' when it is exported. And it obviously needs to be saved locally with a filename. 'index.html' is the default but you can change that if you want to. Note that pages with an extension like .php will have .html added, because they're not php files any longer but fixed html.

Additional js rendering time This setting applies to the 'Render page / run js' site setting. If your page takes time to dynamically fetch content and display it, you may need to increase this setting to make sure that all of this is fully finished before Watchman grabs the rendered content.

Question? Don't be afraid to ask

PeacockMedia

PeacockMedia