Scrutiny 9 Per-site Settings and Options

More settings and options related to the scan

Within your site config listing, There are a few basic settings and options under 'Basic options' and there are many more under 'All settings and options'

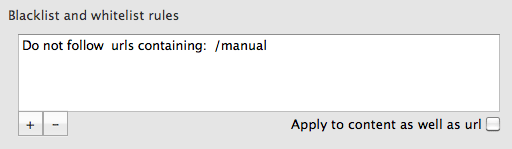

Rules - Ignore / Do not check / Do not follow

(Previously known as blacklists and whitelists)

In a nutshell, 'check' means ask the server for the status of that page without actually visiting the page. 'Follow' means visit that page and scrape the links off it.

Checking a link means sending a request and receiving a status code (200, 404, whatever). Scrutiny will check all of the links it finds on your starting page. If you've checked 'This page only' then it stops there.

Otherwise, it'll take each of those links it's found on your first page and 'follow' them. That means it'll request and load the content of the page, then trawl the content to find the links on that page. It adds all of the links it finds to its list and then goes through those checking them, and if appropriate, following them in turn.

Note that it won't 'follow' external links, because it would then be crawling someone else's site, which we don't want. It just needs to 'check' external links but not 'follow' them.

You can ask Scrutiny to ignore certain links, not check certain links or not to follow certain links. You can now also choose 'urls that contain' / 'urls that don't contain'

This works by typing a partial url. For example, if you want to only check pages on your site containing /manual/ you would choose 'Do not follow...', choose 'urls that don't contain' and type '/manual/' (without quotes). You don't necessarily need to know about pattern matching such as regex or wildcards, just type a part of the url.

Since v6.7 Scrutiny supports some pattern matching functionality to the rules. This is limited to * for 'any number of any characters' and $ meaning 'at the end', eg /*.php$

You can limit the crawl based on keywords or a phrase that appear in the content by checking that box.

You can highlight pages that are matched by the 'do not follow' or 'only follow' rules. This option is on the first tab of Preferences under Labels. (Flag blacklisted (or non-whitelisted) urls)

Limit crawl based on robots.txt

The robots.txt file at the root of your website gives instructions to bots; which directories or urls are disallowed. Scrutiny can observe this file if you choose.

If robots.txt contains separate instructions for different search engines, Scrutiny will observe instructions for Googlebot, or failing that, '*'

Ignore external links

This setting makes Scrutiny a little more efficient if you're only interested in internal links (if you're 'creating a sitemap, for example)

Don't follow 'nofollow' links

This specifically refers to the the 'rel = nofollow' attribute within a link, not the robots meta tag within the head of a page. With this setting on, links that are nofollow will be listed and checked, but not followed.

To check for nofollow in links, you can switch on a nofollow column in the 'by link' or 'flat view' links tables. With the column showing in either view, Scrutiny will check for the attribute in links and show Yes or No in the table column. (You can of course re-order the table columns by dragging and dropping).

Consider subdomains of the root domain internal

foo.com and m.foo.com are considered the same site as www.foo.com with this setting switched on.

Limiting Crawl

If your site is big enough or if Scrutiny hits some kind of loop (there are a number of reasons why any webcrawler can run into a recursive loop) it would eventually run out of resources. These limits exist to prevent Scrutiny running for ever, but they have quite high defaults.

By default it's set to stop at 200,000 links. Scrutiny will probably handle many more, but it's a useful safety valve and may stop the crawl in cases where Scrutiny has found a loop.

(Scrutiny now has low disc space detection to prevent a crash caused by this reason.) If that happens, then it'll be necessary to break down the site usng rules (blacklisting / whitelisting).

Besides the number of links found, you can also specify the number of 'levels' that Scrutiny will crawl (clicks from home).

These settings can be set 'per site' but the defaults for new configurations are set in Preferences

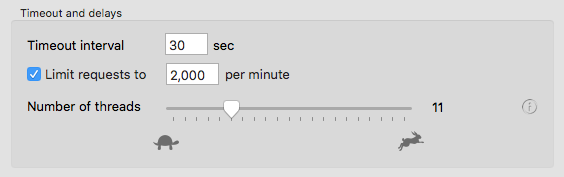

Number of threads

This slider sets the number of requests that Scrutiny can make at once. Using more threads may crawl your site faster, but it will use more of your computer's resources and your internet bandwidth, and also hit your website harder.

Using fewer will allow you to use your computer while the crawl is going on with the minimum disruption.

The default is 12, minimum is one and maximum is 40. Experiment to find the optimum crawl speed with the slide half way.

Beware - your site may start to give timeouts / errors or other problems if you have this setting too high. In some cases, too many threads may stop the server from responding or responding to your IP. If moving the number of threads to the minimum doesn't cure this problem, see 'Timeout and Rate limiting' below.

Timeout and Rate limiting

If you're getting timeouts you should first reduce the number of threads you're using.

Your server may not respond to many simultaneous requests - it may have trouble coping or may deliberately stop responding if being bombarded from the same IP. If you get many timeouts at the same time, there are a couple of things you can do. First of all, move the number of threads to the extreme left, then Scrutiny will send one request at a time, and process the result before sending the next. This alone may work.

If not, then you can now specify the maximum number of requests that Scrutiny makes per minute.

You don't need to do any maths; it's not 'per thread'. Scrutiny will calculate things according to the number of threads you've set (and using a few threads will help to keep things running smoothly). It will reduce the number of threads if appropriate for your specified maximum requests.

If your server is simply being slow to respond or your connection is busy, you can increase the timeout (in seconds).

Check for broken images

(Now on by default.) The link check will also check for broken images (<img src=...) Their alt text will be displayed in place of link text in the links reports.

Check for broken linked js and css files

Linked resources (<link rel=...) will also be checked and reported with the link check results.

Highlight missing link url

When a website is being developed, link targets may sometimes be left blank or a placeholder such as # used. (<a href = "" ) If you'd like to find such empty link targets, switch this setting on and they'll be highlighted as if they were bad links. With the setting off, they will be ignored.

Check form actions

Extracts the submision url (action) of a form (<form action="...") and tests it. Beware, no fields will be sent, so if any form validation is carried out, this test may return some kind of error.

Archive pages while crawling

When Scrutiny crawls the site, it has to pull in the html code for each page in order to find the links. With the archive option switched on, it simply dumps the html as a file in a location that you specify at the end of the crawl.

Since v6.3, the archive functionality is enhanced. (Integrity Plus and Scrutiny only, not Integrity.) You have the additional option for Scrutiny to process the files so that the archive can be viewed in a browser (in a sitesucker-type way) This option appears within a panel of options accessed from the 'options' button beside the Archive checkbox. In Scrutiny the options also appear in the Save dialog (if shown) at the end of the crawl.

The archiving functionality is still fairly basic. For Much better scheduled archiving / monitoring, see Website Watchman.

Spelling & grammar

Check spelling and grammar of a page's content during the scan. This takes time and resources (particularly grammar) so don't switch on unless you want spell-check or grammar results. Spell checking isn't too much of an overhead but grammar really is, especially if there's a significant amount of text on your page. The languages selector offers languages installed on your computer, and standard MacOS functionality is used for the checks. Scrutiny will use your existing custom dictionary (learned words) if it exists.

Keyword analysis

With this setting switched on, a certain amount of keyword analysis is done while the scan is running. With this switched on, in the SEO results you'll be able to see occurrences in the content of keywords that you type into the search box (the count will appear in the 'content column). The keyword-stuffed page report also relies on this setting being switched on.

For more information, see Tools : Keyword analysis

Check links within PDF documents

This takes time and resources because the content of pdf documents needs to be downloaded (and pdfs can sometimes be large files), but the links within those documents can then be reported, checked and followed, just as if the pdf document is a page on your site.